Responsive, Formative Evaluation: A Flexible Means for Improving Distance Learning Materials

A vital element in the development of flexibly designed distance learning materials is the use of systematic, formative evaluation. With the initial implementation of the materials, a concurrent evaluation will provide feedback which can be used to improve those materials. Such a process is currently being undertaken at Universiti Sains Malaysia (USM) as part of a major redevelopment of its distance education program. New learning materials undergo evaluation prior to revision and subsequent fixed publication. Experience at USM has revealed certain methodological problems with designing a formative evaluation of distance learning materials. The principal problems are the difficulty of obtaining detailed user data and that of deciding upon suitable evaluation criteria. The design developed at USM consequently emphasizes flexibility in the source, timing and methods of data collection, and in interpretation and reporting, given that the purpose of the evaluation is to provide meaningful feedback to course developers.

Un des éléments primordiaux dans l’élaboration de matériaux flexibles d’enseignement à distance est l’emploi d’évaluations systématiques et formatrices. Une évaluation, tenue simultanément avec la mise en application initiale des matériaux, provoquera des réactions qui pourront éventuellement servir à l’amélioration de ces derniers. Ce processus est présentement appliqué à “Universiti Sains Malaysia” (USM) et fait partie de la réorganisation majeure de son programme d’enseignement à distance. Les nouveaux matériaux d’apprentissage sont soumis à une évaluation avant d’être revus et subséquemment publiés.

L’expérience de l’USM a révélé certains problèmes méthodologiques dans l’élaboration d’évaluations formatrices des matériaux d’enseignement à distance. Les problèmes majeurs sont constitués par la difficulté d’obtenir des informations détaillées de la part des utilisateurs et celle de décider des critères adéquats d’évaluation. Le modèle développé à l’USM met, en conséquence, l’emphase sur la flexibilité de la provenance, du rythme et des méthodes de recueil des informations aussi bien que sur l’interprétation et le compte rendu, le but des évaluations demeurant de fournir des renseignements significatifs aux responsables de l’élaboration des cours.

The most effective distance learning materials are likely to be those which have built-in flexibility. They reflect an underlying philosophy of catering for individual learning needs. Development of such materials will probably only be achieved after several stages of development, review, and refinement. This process will be characterized by flexibility and responsiveness on the part of decision makers; that is, the course developers (writers) responding positively to suggestions for materials improvement.

A variety of strategies can be, and are, applied to effect materials improvement. Critical analysis and review during initial development, conducted by peers, both subject experts and instructional experts, is most commonly practiced. Developmental testing (or pre-testing) as conducted by the Open University, United Kingdom (U.K.) can be an effective strategy. Many institutions, however, do not have the time or resources to pre-test their materials. They do, of course, resort to a variety of critical analysis techniques, but once the materials are released they tend to stay unchanged for a relatively long time. An effective and inexpensive strategy is needed to obtain the type of information on which genuine improvement of materials can be based.

Systematic formative evaluation can fulfill a valuable role in the distance learning materials development process. An on-going, broadly based evaluation conducted simultaneously with materials development will result in meaningful, value-laden feedback that can be assessed and acted upon by the materials developers. The longer term quality of the materials will reflect the appropriateness of the evaluation and, most importantly, the flexible, responsive attitude of the decision makers responsible for improving those materials.

Universiti Sains Malaysia (USM) is the only university in Malaysia which offers degree courses by the off-campus or distance learning mode. The university’s Off Campus Centre is currently implementing a major redevelopment of its distance courses. All courses are being converted to a modularized, self-instructional format. Previously the course materials consisted almost entirely of lecturer-prepared course notes. Now the emphasis is upon developing materials which are interactive with students, containing activities and self-assessment questions which are integrated with text that is instructional in style rather than merely factual or content based. As USM’s Off Campus Program spans five levels, with the final or sixth level being full-time on campus, it will take USM five years to introduce its new materials. The development plan for each off-campus course consists of a 3 + 5 year time frame. The intention is that a course will take three years to reach fixed publishable standard, after which it will remain unaltered for a further five years.

Under USM’s scheme, each new course will have its materials evaluated during its first year of implementation. USM’s Off Campus Program runs on a relatively low budget. It does not have sufficient resources to subject new course materials to pre-course developmental testing as conducted at the Open University, U.K. (Henderson, Kirkwood, Mayor, Chambers, & Lefrere, 1983). As a result of the first phase of evaluation some courses will be designated as edisi awal, which means “limited edition.” The writers of the courses thus identified (with assistance from instructional designers, editors, and media specialists) are expected to refine their materials further over the next two years to bring the materials to a final publishable standard. Remaining courses will still be endeavouring to attain the edisi awal classification.

Evaluation will play a significant role within the 3 + 5 and edisi awal scheme. The principal evaluation is the formative evaluation conducted during the first year of implementation of new course materials. This is a sustained, systematic evaluation. Once a course has been designated edisi awal, its writer and other members of the course team are expected to continue evaluating the materials but on a less formal and systematic basis. Similarly, those courses which fail to achieve edisi awal in their first year are expected to undergo further but less formal evaluation of their materials. At the present time there is no plan to conduct summative evaluation of published materials when they near the end of their five year shelf life. At the moment, USM Off Campus staff are more concerned with getting materials to publishable standard. Once this has been achieved, undoubtedly a plan for summative evaluation will be conceived. Thus, the present role of evaluation within the redevelopment program for USM’s off-campus materials is of a formative, systematic evaluation conducted concurrently with the first implementation of the new materials.

The Off Campus Centre at USM has appointed an evaluation team to conduct its evaluation of learning materials. The team comprises three members of the Off Campus Centre and four academic staff from the School of Education. The Off Campus representatives are the senior academic in charge of course materials production, an instructional designer, and an administrative officer. The education lecturers are volunteers, all of whom are experienced in evaluation. There are no Off Campus course writers on the team.

The principal objective for the USM evaluation is to provide meaningful feedback to course writers who can subsequently use this information to improve their course materials.

Secondary or enabling objectives are as follows:

The design for the USM evaluation is essentially eclectic, but to some extent is based on Parlett and Hamilton’s Illuminative Evaluation model (1972) and Stake’s Transactional or Responsive model (1967). It fits, therefore, into what is commonly referred to as the social-anthropological paradigm of evaluation models. Its approach and methodology are largely designed to obtain a qualitative view on course materials. Such an approach seems more appropriate for the purpose of the USM evaluation because the principal objective is to provide feedback to course developers about their materials. Alistair Morgan (1984) from the Study Methods Group at the Open University, U.K., states that “there is a trend towards qualitative methodologies in educational research and evaluation so as to increase the relevance of findings to teachers and course designers” (p. 254). McCormick (1976) also notes that “hard” data evaluations are insufficient to advise on the type of changes required to improve learning materials. This is not to deny the importance of such techniques or the value of quantitative data; on the contrary, in this evaluation design certain forms of “hard” data are sought. However, these are used only to assist in the qualitative interpretation of the value of the materials.

There are specific factors which must be considered when designing an evaluation of distance learning materials; these factors are not commonly found within other educational contexts. Briefly, the factors to be considered are as follows:

If off-campus students do well in a course it does not necessarily follow that the course materials are good. The converse also applies: if students perform poorly it does not necessarily follow that the materials are inadequate. The types of evaluation models and approaches which are commonly used in an on-campus situation may not be so directly applicable or suitable for an off-campus materials evaluation.

Another problem in trying to design a distance learning materials evaluation is that there is a lack of directly related published literature which can be drawn upon for reference. Feasley (1983) states that very little was published in this area prior to 1980. Published evaluation studies in distance education tend to deal more with courses than materials. They focus on such issues as student attrition (in particular), support services, and learning problems. When materials are included in course evaluations it is usually part of a general retrospective assessment of the course and its resources. The evaluation then usually lacks detail and is not recent enough to be reliable as a basis for improving the materials.

The overall approach planned for the USM evaluation consists of obtaining detailed value perceptions or judgements from the persons who developed the materials and the users, most particularly the students. The intention is to compare these value judgements in a systematic and illuminative way so that the writers can form their own conclusions about the worth of the materials and how these can be improved.

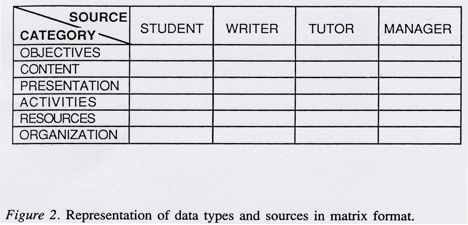

The characteristics or components of the course materials are categorized into objectives, content, presentation, activities, resources, and organization. All information obtained from respondents about any course is classified according to these headings. “Objectives” refers to the stated and/or implied objectives in the materials. “Content” refers to the actual subject matter covered. “Presentation” is the instructional style and expression of the content. “Activities” refers to the student exercises and learning tasks that are prescribed in the materials. “Resources” are the additional learning aids such as slides, audio cassettes, and selected readings. “Organization” refers to the way in which all the course materials are arranged to form a comprehensive learning package. By examining the materials through these categories, a more systematic qualitative picture is obtained.

Information or value perspectives about the quality of the materials are obtained from the developers (writers) and the users (principally students, but also tutors and managers). The writers’ perceptions are sought as to what they think they have achieved in writing the materials and what they expect to be the effectiveness of their materials. The students’ views are sought so that these can be compared with the writers’ views. Managers and tutors represent somewhat different perspectives. Information is sought from the regional tutors, not for the sake of their views on the quality of the materials, but instead, for their observations of how the students have used the materials. Managers in the USM system are academic staff who mark external students’ assignments. In some cases the manager is the person who wrote the course; in other cases this is not so. Information is sought from these non-writer managers to see what they think of the materials and also to include their comments on how well they think students have coped.

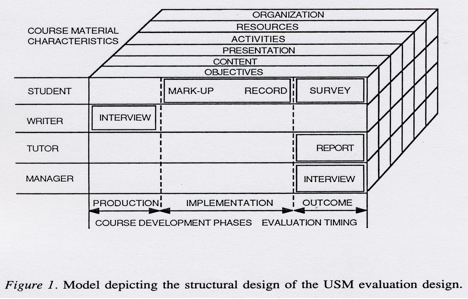

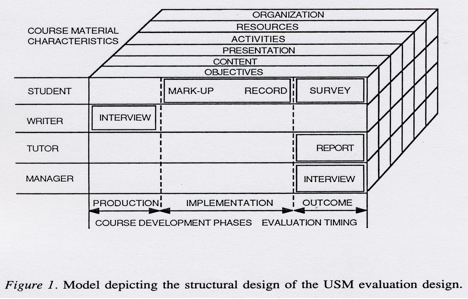

The overall time for the collection of formative evaluation data is approximately one year. This occurs in concert with the three phases of course production and delivery (see Figure 1):

The main central panel of Figure 1 indicates how information is collected in relation to the source and timing.

At the end of course production, information is collected from the writers by means of a semi-structured interview. These interviews are conducted by members of the evaluation team. Interviewers ensure that they create an informal, relaxed atmosphere while keeping to the broad categories into which course materials information is placed. The interviews are recorded on audiocassettes and later transcribed and summarized onto summary record sheets (computer filed).

During the implementation phase a sample of approximately 10% of the students in each course (at USM this is usually between ten and twelve students) provides on-going, highly specific feedback on the quality of their materials. These students are randomly selected and instructed to make specific (standardized) marking symbols in their course materials wherever they experience any difficulty or wish to comment. After completing a unit of work (about 35 study hours), the student completes a record chart listing all the difficulties experienced in that unit as a result of perceived weaknesses in the materials. These record charts are returned to the evaluation team for processing. At the completion of the course the students’ marked-up units are also submitted to the evaluation team so that the markings can be noted and processed. The marked units are returned to the students along with a completely clean set of course materials.
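The random selection of the mark-up sample might be sketched as follows. This is an illustration only, not the procedure used at USM: the function name `select_markup_sample`, the student identifiers, and the enrolment figure of 110 are hypothetical; only the approximate 10% fraction comes from the text.

```python
import random


def select_markup_sample(student_ids, fraction=0.10, seed=None):
    """Randomly choose roughly `fraction` of a course's students (at least one)."""
    ids = list(student_ids)
    if not ids:
        return []
    size = max(1, round(len(ids) * fraction))
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return sorted(rng.sample(ids, size))


# Hypothetical enrolment of 110 students with invented identifiers.
enrolled = [f"S{n:03d}" for n in range(1, 111)]
sample = select_markup_sample(enrolled, seed=1)
```

Recording the seed alongside the sample would allow the team to show later that the selection was random rather than hand-picked.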

At the final or outcome stage, several information collection strategies are applied. A general survey questionnaire is administered to all students who complete the course. A semantic differential format has been chosen so that the students can quickly and simply provide an extensive range of value judgements about their course materials. Although various ideas were considered by the evaluation team for administering the questionnaire, the team decided to conduct it as a single round mailed survey of students.
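As a rough illustration of how semantic differential responses could be coded and summarized, consider the sketch below. The article does not list the adjective pairs or the number of scale points used at USM, so the pairs, the 7-point scale, and the function name `summarize` are all assumptions made for the example.

```python
from statistics import mean

# Hypothetical bipolar adjective pairs, keyed by materials category.
SCALES = {
    "presentation": ("confusing", "clear"),
    "activities": ("unhelpful", "helpful"),
}
POINTS = 7  # assumed scale: 1 = left-hand adjective, 7 = right-hand adjective


def summarize(responses):
    """Average each scale's ratings across respondents, skipping blanks."""
    summary = {}
    for scale in SCALES:
        ratings = [r[scale] for r in responses if r.get(scale) is not None]
        for value in ratings:
            if not 1 <= value <= POINTS:
                raise ValueError(f"rating {value} is outside 1..{POINTS}")
        summary[scale] = round(mean(ratings), 2) if ratings else None
    return summary


# Three invented respondents; the third left one scale blank.
responses = [
    {"presentation": 6, "activities": 3},
    {"presentation": 5, "activities": 2},
    {"presentation": 7, "activities": None},
]
summary = summarize(responses)
```

In keeping with the design described here, such averages would serve only to support the qualitative interpretation, not to replace it.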

The tutors based in regional study centers submit an open report on their perceptions of how well their students used the course materials. The tutors’ reports follow the general categories devised for classifying the course materials’ characteristics.

The non-writer managers are interviewed after the students’ assessments have been completed for the course. As with the earlier interviews of writers, these post-course manager interviews are semi-structured and outwardly informal. The interviews are similarly recorded, transcribed, and subsequently summarized.

Summaries are made of all descriptive reports and are subsequently computer filed. Similarly, the student marked-up records are also computer coded and filed. The general survey-questionnaire is fully computer coded so that the resultant data can be easily stored on file.

The evaluation team deliberated over whether to use microcomputers or a mainframe. Although the computer applications which would normally be generated from this evaluation are reasonably simple, it was decided to use a mainframe from the outset. This enables more in-depth analysis to be made of data, particularly if at any stage quantitative analysis is required for larger scale data, such as those derived from the general survey.

The role of the evaluators is to collect, process, and interpret the evaluative judgements of others, not to pass judgement themselves. Their essential task in processing the information is to make sense of it so that a more complete picture can be seen when the various value perspectives are compared. Figure 2 illustrates in a simplified way how the processing can be visualized with all information for each course filed under its data source and according to the category of its course characteristics. A matrix approach is used to interpret the data. By adopting the matrix system and with the aid of a computer, the evaluators can retrieve data from any category or source and compare it with any other category or source. In doing so, the evaluators are looking for meaningful understanding. They are searching for points of congruence or discrepancy between the various value judgements.
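The matrix idea can be sketched in a few lines of Python. This is an illustrative sketch only, not the system run at USM: the class name `EvaluationMatrix`, its methods, and the sample comments are hypothetical; only the six category names and the four data sources are taken from the text.

```python
from collections import defaultdict

# Category and source names come from the article; all else is hypothetical.
CATEGORIES = ("objectives", "content", "presentation",
              "activities", "resources", "organization")
SOURCES = ("writers", "students", "tutors", "managers")


class EvaluationMatrix:
    """Files each summarized comment under (source, category) for one course."""

    def __init__(self):
        self._cells = defaultdict(list)

    def file(self, source, category, comment):
        if source not in SOURCES:
            raise ValueError(f"unknown source: {source}")
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self._cells[(source, category)].append(comment)

    def retrieve(self, source=None, category=None):
        """Return comments matching a source, a category, or both."""
        return [
            comment
            for (src, cat), comments in self._cells.items()
            if (source is None or src == source)
            and (category is None or cat == category)
            for comment in comments
        ]

    def compare(self, category, source_a, source_b):
        """Place two sources' comments on one category side by side."""
        return {
            source_a: self.retrieve(source_a, category),
            source_b: self.retrieve(source_b, category),
        }


matrix = EvaluationMatrix()
matrix.file("writers", "activities", "Exercises build on each unit's text.")
matrix.file("students", "activities", "Unit 3 exercises assume terms not yet defined.")
matrix.file("students", "resources", "Audio cassette arrived after the unit was due.")

side_by_side = matrix.compare("activities", "writers", "students")
```

Here `side_by_side` pairs the writer's intention with a student's experience of the same category, which is the kind of congruence or discrepancy the evaluators look for. The code makes no judgement itself; interpretation remains with the evaluators.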

Given their role as described above, the evaluators report their findings to their selected audiences in a descriptive form but without passing judgement or making recommendations.

The principal audience is the course writers. It is their responsibility to act on feedback received about their course materials and to revise those materials as they deem appropriate. The evaluators write reports which indicate their collective interpretation of the various value judgements given for each course. The interpretation is supported by paraphrasing or quoting specific examples or evidence taken from the collected data. Because the evaluators’ reports can only be summaries, all data relevant to a course are made available to the writer(s) of that course.

Appropriately written reports are prepared for other specific audiences, such as the students, tutors, managers, Off Campus production and resource staff, as well as various branches of the university. All reports are intended to be an accurate portrayal of those aspects of the evaluation relevant to each specific audience.

One of the major attributes of the USM design is that it is responsive in a systematic way to the views and judgements of those persons who are most closely connected with the course materials either in producing or using them. The evaluation team, by processing the judgements of others rather than passing judgement, is able to operate in a non-threatening manner, particularly to the writers. Kirkup (1981) in her study of an Open University, U.K. Foundation Technology course, points out the reluctance of revision authors to accept recommendations made directly by evaluators.

The USM evaluators avoid being prescriptive. Consequently, their reports are more readily accepted and are seen as a more comprehensive portrayal of the value of each set of course materials. The USM evaluation has been designed to be flexible—flexible for the informants, so that they will provide information in a way and at a time which is convenient for them and flexible for the evaluators, so that they can adjust their methods and timing as appropriate. Referring back to Figure 1, the methods and timing for data collection as shown in the central panel can be altered according to circumstance. The important factor to consider is the overall net effectiveness of the evaluation strategies. For an evaluation of this type to be successful and to be sustained it must be adaptable because, even with the most thorough planning, circumstances are never quite as expected when trying to evaluate so many different sets of course materials.

At USM the evaluation team (of seven) has been able to reach consensus on its role and to change the format, scope, and timing of its evaluation strategies accordingly as the need arises.

The variety and timing of the evaluation strategies deployed by USM contribute to the reliability and validity of its evaluation. This is especially important in a qualitative study, for such studies are often accused of being too subjective. The USM design does not rely on any one data collection instrument. Each instrument contributes to the overall completeness of the results. For example, the general survey of students in each course conducted at the end of the academic year is obviously limited in the depth of understanding it can provide. This limitation, however, is offset by the highly specific data collected from the samples of students who mark up their materials and maintain constant records of problems they experience within each unit.

Often, when students have completed a course, the things which annoyed them previously are no longer important and so they tend to respond mildly on retrospective surveys. The USM design is meant to capture spontaneous judgements as they are made. For this reason the students’ marking-up of their units and recording of difficulties while they study their course are probably the most valuable data collected in the evaluation.

The systematic processing of the qualitative data is regarded by the evaluation team as another major strength of its design. Categorization of the course materials’ characteristics, and the subsequent use of the interpreting matrix, ensure that the materials undergo comprehensive and purposeful scrutiny. The matrix, in particular, enables the evaluators to focus on specific aspects of the materials and to make meaningful interpretations of the complexity of opinions relating to those aspects. The matrix processing method gives structure to the qualitative data so that they can be conveyed in a form which the writers are more likely to accept. Chambers (in Henderson et al., 1983) states that writers understandably need to be convinced that they should revise their materials. The USM system allows the evaluator to transform a mass of subjective information into a systematic analysis that conveys insightful meaning to the writers. The information is organized and presented in a way that cannot easily be dismissed by reluctant writers.

Contemporary approaches to educational evaluation particularly stress the importance of teachers (distance education writers are teachers) being directly involved in the evaluation of their own courses and materials. Contrary to this strong opinion, the USM design does not include course writers in its evaluation team and it regards this omission as one of the strengths of its design. Distance educators are well aware that course writing is laboriously time-consuming and to some extent, uninteresting. In many institutions it is difficult to get writers to produce the required course by the prescribed deadline. To involve them directly in evaluating as well as revising their materials is to place large and probably excessive demands upon them. Furthermore, as Scanlon states, “Authors find it difficult to be objective about evaluative comment on their work” (1981, p. 171).

Consequently, at USM it was decided to form an evaluation team of persons who are involved in off-campus materials production but who are not authors. Members of the evaluation team perform their role from a position of “insider/outsider.” According to Chafin (1981), this is a position in which the evaluator is seen as part of the program but is regarded as sufficiently removed from its intricacies to be objective. The belief within the Off Campus Program at USM is that course writers have enough to do in writing their courses and remaining committed to revising them. If they were also required to evaluate their courses as part of a formal program, this task might never be completed. The USM plan is meant to ensure that a sustained commitment to course materials evaluation is made while cooperation from authors is secured for longer term materials improvement.

A further strength of the USM plan is that it does not purport to be the total evaluation for course materials improvement. It is one part, albeit a substantial part, of the improvement process. During the initial production of the materials, the writer is assisted by an instructional designer, content specialist, and language editor. Each of these persons acts as an expert in the field to assist with the refinement of the materials before their first release for student use. In addition, writers are encouraged to conduct self-evaluation by communicating directly with students and seeking various forms of feedback. Writers also have access to examiners’ reports on student achievement in all courses, although inferences drawn from these results about the value of the distance learning materials should be very guarded. The formal evaluation conducted by the specialist team, however, is recognized as the major contributor of data which can lead to the revision of materials.

A major limitation of the USM design is that the plan has no formula for action on the part of the writers. If writers ignore the evaluation information then the evaluation is unsuccessful. At a later stage it may be seen as preferable for the evaluators to be prescriptive in making recommendations and to include writers as members of the evaluation team. The plan is flexible: if after experience such changes seem desirable, they can be accommodated. The context and institutional attitudes will determine the most appropriate formula for improving course materials. This formula will probably evolve after several evaluation experiments.

The evaluation also is time-consuming. This is a common criticism of such qualitative methodologies. It is always questionable whether the time consumed and the drain on human resources is warranted in light of expected or actual outcomes. It may be that at USM, despite all the evaluation effort, very little revision is made by authors to their course materials. The same net result could be produced from a lesser or different evaluation effort.

More efficient methods of recording and processing data may be necessary because the amount of subjective data can become unwieldy. Further experience may lead to moves to simplify the processing by classifying and coding data rather than entering descriptive versions. If an alteration is made to the plan to reduce the amount of subjective data collected, then the effects of such an action would need to be considered particularly carefully. Nathenson and Henderson (1980), commenting on the value of student feedback to Open University, U.K. courses, state that open-ended data are more useful in drawing conclusions about necessary revisions. They are, though, time-consuming to collect, process, and interpret. The results should warrant the effort.

The contribution of the tutors and managers to the evaluation may be found to be too limited. At various stages during the planning of the evaluation, consideration was given to seeking more detailed information from these two sources using more exacting collection methods. The evaluation team considered whether it should survey all tutors or interview a select sample and then survey the rest. They also considered an idea of interviewing all managers (including manager-writers) during the implementation phase (in addition to the outcome phase) of the materials used by the students. The decision not to adopt these methods may prove to have been a mistake. However, while these approaches may be suitable, they could not be accepted into the USM design for reasons of time and human resource availability. As a consequence, the plan as illustrated in Figure 1 is directed more toward the collection of summative or retrospective feedback data. Further experience at USM will indicate whether or not more information ought to be obtained from the tutors and/or managers.

The limited use of student achievement data in this evaluation may be seen by some critics as a weakness in the design. This view is unlikely to come from the evaluation team, who are convinced that detailed analysis of student performance is not necessary for an evaluation of distance learning materials. Achievement data may become necessary at USM, though, as part of the strategy to induce writers to improve their materials. It should be remembered that under the overall USM evaluation plans, writers can obtain examiners’ reports and make their own judgements of students’ results in relation to the course materials. The evaluation team believes that writers should make themselves aware of student performance on their courses but, as this is unlikely to be done in a systematic way, the evaluators may subsequently feel obliged to provide the data.

Development of the evaluation plan did not commence until long after the introduction of new off-campus course materials. By the time the evaluation team convened in August 1984, the new Stage 1 courses had already been used for one academic year (July 1983-June 1984) and students had just begun to study at Stage 2 level. Naturally, it has taken the evaluation team a substantial time to reach consensus on its plan and to develop data collection instruments. The effect of these delays is that the 3 + 5 materials development model has not been given the opportunity to work as planned. By the time evaluators can provide feedback to writers of Stage 1 courses, the materials will have been used for two full academic years without revision. Consequently, it is virtually impossible to have any Stage 1 materials revised for full publication after three years of formative development. No materials have yet been classified as edisi awal (limited edition). This is the intermediate step which can only come after formative evaluation is completed.

Despite the delays, the evaluation is in process. The two main data collection instruments, namely, the general student survey-questionnaire and the mark-up record charts, have been prepared, tested, and distributed. During the 1984-85 academic year, not only was the evaluation team formed and the evaluation planned, but evaluation of both Stage 1 and Stage 2 materials was also under way. Because of the unavoidable delays, more of the feedback on Stage 1 and Stage 2 materials than is desirable will necessarily be retrospective. The feedback should still be informative, however. If the required level of detail is not provided by any of the course student samples, then the procedure will be repeated with a new sample during the next academic year. Obviously, though, this will further delay the revision process.

The use of semi-structured interviews to obtain feedback from writers and managers seems to be a successful strategy. The reason for some tentativeness in this statement is that this part of the evaluation has faced a temporary administrative obstacle. Nearly all Stage 1 and Stage 2 writers have been interviewed and both interviewer and interviewee have commented favourably on the process. The problem, however, is that the evaluators cannot find an audio [dictaphone] typist to type transcripts from the interview recordings. Apparently audiotypists are a rarity on the island of Penang! If the recordings of interviews are not transcribed and entered along with the other evaluation data soon, a major breakdown in the plan will occur. This is because there will be a “bottleneck” with all the student feedback data coming in to be processed at the same time. Tutors’ open-ended reports are now being received by the evaluators. No attempt has yet been made to process these. The evaluators hope that these reports will not be difficult to process and that they will subsequently prove valuable to the evaluation, given that the original intention was to survey tutors rather than request them to submit open-ended reports.

Formative evaluation is an important part of any developmental program aimed at producing good learning materials. This is especially so in distance education because the materials are not only meant to provide the prescribed course content, but also to facilitate the learning of that content. Furthermore, distance learning materials tend to be given a relatively long shelf-life; they need to be of good quality if they are going to be effective learning instruments over time.

USM’s major and somewhat ambitious program of redeveloping all of its distance learning courses includes plans for evaluating the new course materials. It is nevertheless a low budget operation and accordingly does not have the time or resources to undergo developmental testing of materials before student use. Improvement of USM’s materials depends fundamentally on the course writers, but the incentive for these persons to revise their work will come from a number of sources, including the evaluation feedback.

Because the USM evaluation has a formative role and because its purpose is to provide meaningful feedback to course writers, the evaluation design chosen uses qualitative, descriptive-interpretive methodologies. The evaluators do not judge the writers’ work but instead process and communicate the judgements of others, the developers and users of the materials. By operating in this manner, and by attempting to obtain highly specific feedback relating to the value of the materials, the evaluators hope to be able to convey information to the writers which the latter will accept, understand, and most importantly, act upon.

A considerable amount of progress has been achieved with the USM evaluation in less than 12 months. Some of the plans, however, have had to be modified and compromises made. Nevertheless, the design is confirmed, data collection instruments developed, and the system is operational. Even allowing for further difficulties, the evaluation is progressing. The evaluators can expect to be able to produce usable reports within a reasonable time. Whether or not the evaluation results are translated into action by the writers is another matter yet to be faced.

In formulating the evaluation design, the evaluation team has given prime consideration to its effect upon course writers. They have tried to avoid problems that other evaluations have suffered in which course authors have been reluctant to accept evaluation. At USM the edisi awal scheme and the official commitment by the university to major improvement of its distance learning materials seem to provide grounds for optimism that writers will improve their course materials. Interviews with writers have revealed a genuine willingness on their part to receive comprehensive evaluative feedback. More time still must elapse before an assessment can be made of the effectiveness of the university’s official incentive scheme, the evaluation system itself, and, more particularly, the extent to which the evaluation is contributing to course materials improvement.

In writing this paper, I acknowledge the joint efforts of my evaluation team colleagues: G. Dhanarajan, Molly Lee, Kim Phaik Lah, Zainal Ghani, A. Lourdusamy, and Hamidah Ismi.

Chafin, C.K. (1981, Spring). Toward the development of an ethnographic model for program evaluation. Current educational development research, 14(1), 3-6.

Chambers, E. (1983). Developmental testing and the course writer. Institutional research review, No. 2 (pp. 51-55). Milton Keynes: Open University.

Feasley, C.E. (1983). Serving learners at a distance: A guide to program practices. Washington, D.C. (ASHE-ERIC Higher Education Research Report No. 5).

Henderson, E., Kirkwood, A., Mayor, B., Chambers, E., & Lefrere, P. (1983). Developmental testing for credit: A symposium. Institutional research review, No. 2 (pp. 39-59). Milton Keynes: Open University.

Kirkup, G. (1981). Evaluating and improving learning materials: A case study. In F. Percival & H. Ellington (Eds.), Aspects of educational technology, Volume XV: Distance learning and evaluation (pp. 171-176). London: Kogan Page.

McCormick, R. (1976). Evaluation of Open University course materials. Instructional Science, 5(3), 189-217.

Morgan, A. (1984). A report on qualitative methodologies in research in distance education. Distance Education, 5(2), 252-267.

Nathenson, M., & Henderson, E.S. (1980). Using student feedback to improve learning materials. London: Croom Helm.

Parlett, M., & Hamilton, D. (1972). Evaluation as illumination. In M. Parlett & G. Dearden (Eds.) (1981), Introduction to illuminative evaluation: Studies in higher education (pp. 9-29). U.K.: University of Surrey, Society for Research into Higher Education.

Scanlon, E. (1981). Evaluating the effectiveness of distance learning: A case study. In F. Percival & H. Ellington (Eds.), Aspects of educational technology, Volume XV: Distance learning and evaluation (pp. 164-171). London: Kogan Page.

Stake, R.E. (1967). The countenance of educational evaluation. Teachers College Record, 68, 523-540.