VOL. 26, No. 2

Problem-based learning (PBL) is an instructional strategy that is poised for widespread application in the current, growing online digital learning environment. Although PBL has a track record as a defensible strategy in face-to-face learning settings, the research evidence regarding PBL in online environments is not clear. A review of the literature revealed that few research studies compare online PBL (oPBL) with face-to-face PBL, and the findings of these studies have been mixed. This study is a combined meta-analytic and qualitative review of the existing research literature comparing oPBL with face-to-face PBL. The study’s aim is to:

This review used a mixed-methods strategy, combining a meta-analysis with a qualitative analysis of the studies that met the inclusion criteria. The overall effect size was slightly in favour of oPBL in terms of student performance outcomes. The qualitative analysis revealed relationships between established concepts of learning. The observations in this systematic review help reduce uncertainty about the robustness of PBL as an instructional strategy delivered in the online environment.

Problem-based learning (PBL), also known as problem-solving learning, is an instructional strategy that is poised to spread in the current and ever-growing online learning environment. While previous evaluations show that this strategy is defensible in face-to-face learning situations, the results of scientific studies are unclear with respect to PBL in online environments. A review of the literature revealed that few studies compare online PBL (oPBL) with face-to-face PBL, and that those that have been conducted reach mixed results. The present study is a systematic review of the scientific literature comparing oPBL with face-to-face PBL. The objectives of the study are to:

To carry out this review, we used a mixed-methods strategy, combining a meta-analysis with a qualitative analysis of the studies that met the inclusion criteria. Overall, we observed that the effect size leaned slightly in favour of oPBL in terms of student performance outcomes. The qualitative analysis revealed links between well-established concepts of learning. The observations made in this systematic review help reduce uncertainty about the robustness of PBL as an instructional strategy used in an online environment.

Problem-based learning (PBL) is an empirically supported instructional strategy in which students are presented with complex, real-life problems and are required to generate hypotheses about the causes of each problem and how best to manage it (Barrows, 1998). PBL is distinctive in that its activities are student-centered: learners assume responsibility for their own learning, and the problems require students to be self-directed as they search for the information needed to resolve them (Barrows, 1998, 2002). When PBL has been implemented in instructional programs, particularly professional programs, the learning outcomes have been so favourable that one author has characterized PBL as the most complete and holistic instructional strategy (Margetson, 2000). PBL research increased considerably with a flurry of interest in adapting PBL to electronic learning environments (Fischer, Troendle, & Mandl, 2002; Steinkuehler, Derry, Hmelo-Silver, & Delmarcelle, 2002). Findings comparing student achievement in face-to-face PBL and online PBL (oPBL) have been mixed. In this era of accountability, where programs must be defensible in terms of learning outcomes in order to be implemented, a systematic review is needed to determine how effective oPBL is.

The online learning environment has been the subject of much research because of a long-standing debate about the delivery of instructional strategies and its impact on learning outcomes (Clark, 1983, 1994; Kozma, 1994; Merisotis, 1999). The controversy rests on the degree to which the electronic delivery of course material affects students’ knowledge acquisition and learning achievements. Within the last few years, two meta-analyses have aggregated the research literature on computer-assisted learning and estimated the effect of technology on student achievement (Bernard et al., 2004; Tamim, 2009). Both studies reported favourable effects of online and digital technology, respectively, across an array of learning strategies, and provided indirect evidence that current technology may be circumscribed as an independent variable that affects learning from course material.

While there is a developing understanding of the effect of technology on learning environments, in terms of the impact of distance learning on traditional instructional strategies (Bernard et al., 2004; Tamim, 2009), there is no clear understanding of the effect of technology on PBL. Further, there is a paucity of research literature providing guidance on what provisions, if any, should be made when transferring existing PBL instructional strategies to an electronic and/or digital environment, and on the instructional factors to consider when transferring learning strategies across modes of delivery.

Background

A longstanding debate has focused on how the digital delivery of online programs affects generic instructional strategies. This debate also extends to questions about the impact that digital delivery has on communication between learners and instructors (Amichai-Hamburger, 2009; Joy & Garcia, 2000; Merisotis, 1999). In 2004, Bernard, Abrami, Lou, and colleagues conducted a meta-analysis of distance learning and found a slight advantage for interactive synchronous (online, real-time) distance learning over face-to-face learning (Bernard et al., 2004). More broadly, Tamim (2009) conducted a meta-analysis of the effect of computer technology on face-to-face learning in formal settings and found a favourable effect of technology on students’ achievement. This was important in circumscribing technology as an independent variable.

While there is a developing understanding of the effect of technology on face-to-face learning environments and of the impact distance learning has on traditional instructional strategies, there is no clear understanding of the impact of technology on PBL or of whether that impact is favourable in terms of learning outcomes. While studies comparing student performance in oPBL and face-to-face PBL are accruing in the research literature, their findings have been mixed. The purpose of this inquiry is to systematically review studies that examine the impact of oPBL on student achievement and to determine whether the evidence favours oPBL outcomes over face-to-face outcomes.

One imperative is drawn from the PBL literature, in particular from three meta-analyses measuring the effectiveness of face-to-face PBL: the assessment of students should align with the instructional strategy (Belland, French, & Ertmer, 2009; Swanson, Case, & van der Vleuten, 1991). To attain methodological rigour, therefore, the studies in a meta-analysis should be aggregated on the basis of whether their student assessment strategy aligns with the instructional strategy (Albanese & Mitchell, 1993; Dochy, Segers, Van den Bossche, & Gijbels, 2003; Vernon & Blake, 1993). In the case of oPBL, a testing approach that measures problem-solving ability is the most methodologically aligned, because the assessment targets what was delivered in the module and because other evaluation parameters may not accurately reflect the curriculum’s influence on students’ primary gains and achievements.

Taxonomy and Definitions of PBL and oPBL

There are many variations in the definition and operationalization of PBL. For the purposes of this study, the key components that define PBL as an instructional strategy are as follows:

For the purposes of this study, and following the work of An and Reigeluth (2008), oPBL is defined as:

Literature Search Strategy

A wide variety of electronic and print resources were reviewed in order to identify suitable studies for inclusion in this meta-analysis. The search of databases included: ASSIA (Applied Social Sciences Index and Abstracts), Canadian Thesis Portal, CINAHL, CSA, Dissertation Abstracts International, EBSCOhost, Education Index, ERIC, Illustra, Informaworld, LISA (Library and Information Science Abstracts), Medline, Physical Education Index, Proquest, PsycLIT, Scholar’s Portal, and Social Sciences Citation Index. In addition, the reference sections of the studies collected were reviewed in order to identify other potentially relevant research. Finally, in addition to the above databases, Google Scholar and the Internet were searched with variations of the search terms listed below (Table 1).

Table 1. Database Searches

| Database | Subject/MeSH Headings (Terms) and Keywords/Phrases | Command |
|---|---|---|
| CINAHL | Problem Based Learning [Subject Heading] OR problem solving system OR learning, active OR active learn OR problem based curicul* OR curricul*, problem based OR learn*, problem based OR problem based learn | AND |
| | Computers and Computerization+ [Subject Heading] OR Internet+ [Subject Heading] OR Programmed Instruction [Subject Heading] OR Computer Assisted Instruction [Subject Heading] OR Education, Non-Traditional [Subject Heading] OR asynchronous OR programmed learn OR self-instruction program OR computer assisted learn OR distance learning OR education, distance | AND |
| | Limits of publication type: clinical trial, research and systematic review | |
| Medline | Problem Based Learning [Medical Subject Heading] OR problem-based learning OR learning, problem-based OR curricul$, problem-based OR active learning OR learning, active OR problem solving system$ OR problem-oriented learning | AND |
| | Education, Distance [Medical Subject Heading] OR Computer-Assisted Instruction [Medical Subject Heading] OR Internet [Medical Subject Heading] OR exp Programmed Instruction as Topic OR Computer-Assisted Instruction$ OR self-instruction program$ OR programmed learning OR digital$ OR asynchronous | AND |
| | Limits of publication type: clinical trial, comparative study, controlled clinical trial, evaluation study, meta-analysis, multicenter study, randomized controlled trial | |
| ASSIA (Applied Social Sciences Index and Abstracts), Dissertation Abstracts International, Education Index, ERIC, Illustra, LISA (Library and Information Science Abstracts), Physical Education Index, Proquest, PsycLIT, Social Sciences Citation Index, CSA/Proquest, EBSCOhost/Informaworld, Scholar’s Portal (Canadian database), and Canadian Thesis Portal | Above terms in their entirety, including the addition of problem first instruction | |
| Hand searching and grey literature | Above terms in their entirety, including the addition of problem first instruction | |

The criteria that determined whether a research study qualified for inclusion in the meta-analysis were as follows:

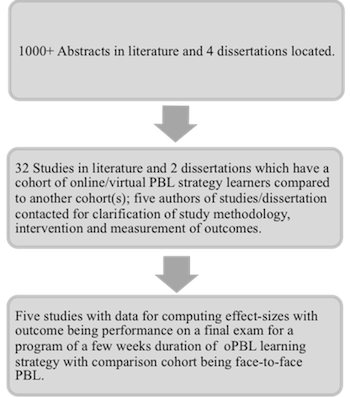

Figure 1: Search results

Over a thousand citations were identified in the search process (Figure 1). Further application of the inclusion criteria reduced the list to 32 studies and 2 dissertations (Figure 1). In several cases, the authors of studies on this shortened list were contacted to clarify the exact nature of the student achievement testing/assessment instruments. Taking the replies into account, the list was narrowed down to five studies (Table 2) that conformed to the strict inclusion criteria outlined above.

Statistical Calculations

In this study, effect size was calculated as the difference between the means of two groups (an intervention group of students who received the learning strategy under study, and a control group offered a standard learning strategy), divided by the pooled standard deviation of the two groups. The study effect sizes were then weighted and pooled, with greater weight given to larger studies. The outcome was a measure of the effectiveness of the intervention (Coe, 2007).
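As a minimal sketch of the two steps just described, assuming the standard pooled-standard-deviation form of Cohen’s d and inverse-variance weights for pooling (the helper names are illustrative, not taken from RevMan): applied to the effect sizes and standard errors listed in Table 2, the weighted mean comes out at about 0.57, the value reported in Table 3.

```python
import math


def cohens_d(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardized mean difference: (intervention mean - control mean) / pooled SD."""
    pooled_var = ((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / (n_t + n_c - 2)
    return (mean_t - mean_c) / math.sqrt(pooled_var)


def inverse_variance_pool(effects, std_errors):
    """Fixed-effect pooled estimate: each study is weighted by 1 / SE^2,
    so larger (more precise) studies count for more."""
    weights = [1.0 / se**2 for se in std_errors]
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical arms: means 75 vs 70, SD 10 in both, 20 students each.
print(cohens_d(75, 10, 20, 70, 10, 20))  # 0.5

# Effect sizes (d) and standard errors from Table 2:
# Bowdish, Dennis, Luck, Brodie, Gürsul & Keser.
d_values = [-2.00, -1.47, 3.20, 0.57, 0.72]
se_values = [2.542, 1.682, 4.462, 1.214, 0.396]
pooled_d, pooled_se = inverse_variance_pool(d_values, se_values)
print(round(pooled_d, 2))  # 0.57
```

Because the weights are 1/SE², the Gürsul and Keser study, with by far the smallest standard error, dominates the pooled estimate.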

Effect Size

All but one of the studies located (see Table 2) permitted direct comparison of the means of the intervention and control arms; the Gürsul and Keser study differed in that it reported results as mean ranks by group (Mann-Whitney U statistic), which could be converted by formula to an effect size (Gürsul & Keser, 2009). Following published guidelines for conducting a meta-analysis (Lipsey & Wilson, 2001; The Cochrane Collaboration, 2002; What Works Clearinghouse, 2008), and using Review Manager (RevMan 5, by The Cochrane Collaboration), numerical and statistical data were extracted to calculate effect sizes (Cohen’s d), including the conversion of the Mann-Whitney statistic to an effect size, with 95% confidence interval estimates. Both random- and fixed-effects models were fitted; for the latter, a weighted mean effect size was computed by weighting each study’s effect estimate by the inverse of its variance. The precision of each effect estimate was expressed as a 95% confidence interval calculated from its standard error. MetaAnalyst Beta 3.13 (Wallace, Schmid, Lau, & Trikalinos, 2009) was used to generate a cumulative effect size plot as a function of the studies’ year of publication, since publication year has been found to correlate with the reported effectiveness of distance education, reflecting progressive, subtle changes in the synchronous/two-way online interactions implemented over time (Machtmes & Asher, 2000).
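The paper does not state which formula was used to convert the Mann-Whitney result. One frequently used route, sketched here as an assumption rather than the authors’ exact method, converts U to a z score with the normal approximation (no tie correction), then z to the correlation r = z / sqrt(N), and finally r to d = 2r / sqrt(1 - r²). The U value below is hypothetical, and the sign of d depends on which group’s U is supplied.

```python
import math


def mann_whitney_to_d(u, n1, n2):
    """Convert a Mann-Whitney U statistic to Cohen's d via z and r.

    Assumes the large-sample normal approximation to U without a tie
    correction; the sign of d follows whichever group's U is passed in.
    """
    n_total = n1 + n2
    mean_u = n1 * n2 / 2.0                               # expected U under H0
    sd_u = math.sqrt(n1 * n2 * (n_total + 1) / 12.0)     # SD of U under H0
    z = (u - mean_u) / sd_u
    r = z / math.sqrt(n_total)                           # correlation effect size
    return 2.0 * r / math.sqrt(1.0 - r**2)               # r converted to d


# Hypothetical U with 21 students per arm (the group sizes listed in Table 2);
# U = 120 is illustrative only, not a value reported by Gürsul and Keser (2009).
print(round(mann_whitney_to_d(120, 21, 21), 2))
```

A U equal to its null expectation (n1 × n2 / 2) maps to d = 0, and swapping which group’s U is supplied (U versus n1 × n2 - U) simply flips the sign.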

Heterogeneity of the effect size distribution was assessed with the chi-squared test, which indicates the extent to which variation in effect sizes cannot be explained by sampling error alone. This was cross-checked by comparing the plots fitted with the fixed- and random-effects models (Shadish & Haddock, 1994). Publication bias was assessed by inspecting a funnel plot (Figure 4) for symmetry (Copas & Shi, 2000). Finally, a cumulative meta-analysis (Figure 5) was conducted to examine how the magnitude of the effect size changed with publication year (Rosenberg, Adams, & Gurevitch, 2000).

Qualitative Analytic Strategy

A qualitative analysis exploring the factors that may account for the effectiveness of oPBL relative to face-to-face PBL was conducted on the five studies included in the meta-analysis. The aim of this qualitative analysis was to identify and understand the key factors, and/or the interactions among them, that may provide a phenomenological explanation of how and why oPBL may be an effective learning strategy for students (LeCompte & Preissle, 1992; Ray, 1994; Van Manen, 1991). NVivo 8 (QSR International Pty Ltd., 2008) was used to code and categorize, line by line, the qualitative data within the studies’ Method, Findings, and Discussion sections (Charmaz, 1983; Hsieh & Shannon, 2005; Strauss & Corbin, 1990). Identified codes were sorted into categories (Creswell, 2002; Seidel, 1998). To test the prevalence of the categories, the qualitative data were transformed into quantitative data (Caracelli & Greene, 1993). These categories then underwent data reduction and were organized into categories a second time (Caracelli & Greene, 1993). However, frequency counts were not the only factor considered when the categories and codes were reorganized into a narrative. Codes that the counting method might have treated as “outliers” were included in the graphical representation (Maxwell & Loomis, 2003) of the factors that contribute to an explanation of oPBL’s effectiveness, as they provided deeper insight into the interplay between the numerous factors that contribute to the current understanding of why oPBL is effective.

Summary of Located Studies

From the extensive literature search, five studies were identified as potentially meeting the meta-analysis inclusion criteria. The studies were inspected to confirm that the test scores/assessments included problem-solving and critical-thinking situations (Table 2), so that the assessment strategy aligned with the problem-based instructional strategy (Segers et al., 1999; Swanson et al., 1991). Inspection of the data revealed narrow standard deviations around the means for final test scores; this suggested a consistent understanding of the course material, with little variation attributable to factors other than the instructional strategy.

Three of the five studies that met the inclusion criteria (Bowdish, 2003; Dennis, 2003; Luck, 2004) reported test scores as percentages. In the Gürsul and Keser (2009) study, the Mann-Whitney U test was used to analyse the test scores, expressed as the average rank of achievement scores for each group; these results were converted to an effect size, as outlined in Table 2 (Gürsul & Keser, 2009). In the Brodie (2009) study, the test scores had to be transposed from a frequency histogram. The represented frequencies were adjusted for students who did not complete the course and/or did not pass or did not have a precise grade specified. Of note, students who either failed or did not have a final grade specified made up 10% of the total participants in the Brodie (2009) study (7% online; 3% face-to-face). This attrition rate of 10% is modest compared with other reports in the literature, where rates can be as high as 50% (Carr, 2000; Chyung, 2001).
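The paper does not describe exactly how the Brodie (2009) histogram was transposed into summary statistics. One common approximation, sketched below under that assumption, assigns every score in a bin to the bin’s midpoint and computes the grouped mean and sample standard deviation. The bins and counts are hypothetical and are not Brodie’s data.

```python
import math


def grouped_mean_sd(bin_midpoints, counts):
    """Approximate mean and sample SD from a frequency histogram by treating
    every score in a bin as if it sat at the bin's midpoint."""
    n = sum(counts)
    mean = sum(m * c for m, c in zip(bin_midpoints, counts)) / n
    ss = sum(c * (m - mean) ** 2 for m, c in zip(bin_midpoints, counts))
    return mean, math.sqrt(ss / (n - 1))


# Hypothetical grade bins (midpoints in %) and counts, NOT Brodie's data.
# Students without a precise final grade are simply left out of the counts.
midpoints = [55, 65, 75, 85, 95]
counts = [2, 5, 10, 5, 2]
mean, sd = grouped_mean_sd(midpoints, counts)
print(f"mean = {mean:.1f}%, SD = {sd:.1f}")
```

The midpoint method tends to be reasonable when bins are narrow; wide bins lose within-bin spread, so the recovered SD is only an approximation.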

Table 2. Study Summary

| Study | Year | Publication Type | Size of intervention group (n) | Size of control group (n) | Effect Size (d) | Standard Error of d |
|---|---|---|---|---|---|---|
| Bowdish | 2003 | Journal | 56 | 56 | -2.00 | 2.542 |
| Dennis | 2003 | Journal | 17 | 17 | -1.47 | 1.682 |
| Luck | 2004 | Journal | 9 | 17 | 3.20 | 4.462 |
| Brodie | 2009 | Journal | 188 | 116 | 0.57 | 1.214 |
| Gürsul | 2009 | Journal | 21 | 21 | 0.72 | 0.396 |
| TOTAL number | | | 291 | 227 | | |

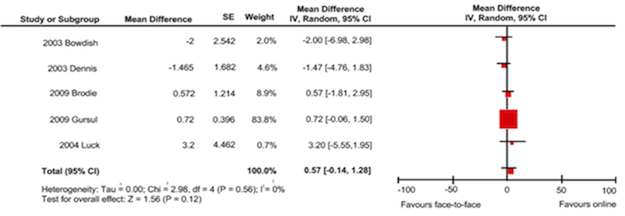

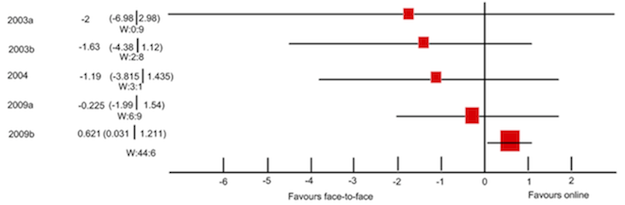

Figure 2: Meta-analysis of Online PBL vs. Face-to-face PBL – Random Effects Model

Figure 3: Meta-analysis of Online PBL vs. Face-to-face PBL – Fixed Effects Model

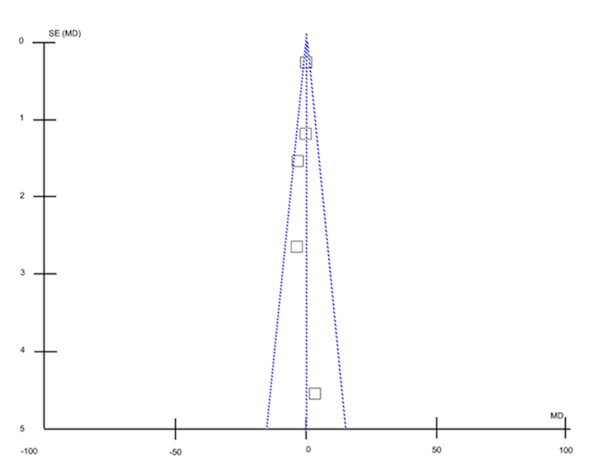

Figure 4: Funnel Plot for Assessment of Publication Bias

Figure 5: Cumulative Meta-analysis

Heterogeneity of Effect Size

The chi-squared test yielded a p value of 0.56, which does not indicate heterogeneity at a cut-off of p = 0.10 (Figures 2 and 3). This cut-off is the one the Cochrane Collaboration recommends for its software program when sample sizes are small, and it was adopted here as a conservative choice because the total number of research participants was low (The Cochrane Collaboration, 2002). With so few studies, however, the test is not considered very reliable at detecting heterogeneity (Shadish & Haddock, 1994). Consistent with the absence of detectable heterogeneity, fitting the data with fixed-effects and random-effects models yielded identical point estimates.
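The heterogeneity check can be approximated from the Table 2 values. This is a minimal sketch assuming Cochran’s Q with inverse-variance weights and the DerSimonian-Laird estimator for the between-study variance tau²; the text does not say which random-effects estimator RevMan used, so that part is an assumption. To avoid external dependencies, the chi-squared tail probability uses the closed form that holds only for even degrees of freedom (here k - 1 = 4).

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail chi-squared probability P(X > x), exact for even df only."""
    if df % 2 != 0:
        raise ValueError("this closed form only holds for even df")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


def heterogeneity(effects, std_errors):
    """Return Cochran's Q, its p-value (df = k - 1) and the DerSimonian-Laird tau^2."""
    w = [1.0 / se**2 for se in std_errors]
    sum_w = sum(w)
    fixed = sum(wi * d for wi, d in zip(w, effects)) / sum_w
    q = sum(wi * (d - fixed) ** 2 for wi, d in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)  # negative estimates are truncated at zero
    return q, chi2_sf_even_df(q, df), tau2


# Effect sizes and standard errors from Table 2.
d_values = [-2.00, -1.47, 3.20, 0.57, 0.72]
se_values = [2.542, 1.682, 4.462, 1.214, 0.396]

q, p, tau2 = heterogeneity(d_values, se_values)
print(f"Q = {q:.2f}, p = {p:.2f}, tau^2 = {tau2}")
```

With these inputs Q is about 2.98 on 4 degrees of freedom (p of about 0.56). Because Q falls below its degrees of freedom, tau² is truncated to zero, the random-effects weights collapse to the fixed-effect weights, and the two models necessarily return the same point estimate.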

Publication Bias

To assess publication bias, a funnel plot was generated in which each study’s intervention effect was plotted against a measure of its size (Figure 4). For this graphical test, the desired outcome is a symmetrical, inverted funnel; when symmetry is lacking, publication bias cannot be ruled out. In the plot generated with Review Manager (RevMan 5, by The Cochrane Collaboration), two of the five studies fell on the vertical line marking the pooled estimate from the meta-analysis on the x axis (Figure 4), and the remaining studies fell roughly around this line. On visual inspection, the plot is inconclusive with respect to publication bias.

The likelihood that the meta-analysis outcomes would change with the inclusion of additional studies was assessed by calculating Orwin’s fail-safe number (Orwin, 1983): the number of additional studies with zero net effect required to reduce the mean effect size (d) to a minimum meaningful value (dc). As a general rule, d = 0.2 is a small effect (Cohen, 1969), and this value was used as dc. The parameters involved in this calculation are shown in Table 3. Nine additional studies would be required to reduce the mean effect size to this minimum meaningful value; in the context of publication bias, this means it would take almost twice as many null-effect studies as the five included here to bring the mean effect down to dc. The amount of publication bias in these five original studies is therefore likely to be low.
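Orwin’s calculation is simple enough to check by hand. The sketch below assumes the common form N = k(d - dc) / (dc - d_fs), where d_fs is the average effect of the hypothetical added studies; setting d_fs = 0 matches the zero-net-effect studies described above. The function name is illustrative.

```python
def orwin_fail_safe_n(k, mean_d, criterion_d, missing_d=0.0):
    """Orwin's (1983) fail-safe N: the number of additional studies, averaging
    an effect of `missing_d`, needed to pull the mean effect from `mean_d`
    down to `criterion_d`."""
    return k * (mean_d - criterion_d) / (criterion_d - missing_d)


# Inputs from Table 3: 5 weighted effect sizes, mean d = 0.57, criterion dc = 0.2.
n_fs = orwin_fail_safe_n(k=5, mean_d=0.57, criterion_d=0.2)
print(round(n_fs, 2))  # 9.25, reported as 9 in Table 3
```

The raw value of 9.25 truncates to the nine studies reported in Table 3.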

Table 3. Orwin’s Fail-safe Number

| Parameter | Number of Weighted Effect Sizes | Weighted Mean Effect Size | Criterion Effect Size Level | Orwin’s Fail-safe Number |
|---|---|---|---|---|
| Problem Solving | 5 | 0.57 | 0.2 | 9 |

Effect Size

Effect sizes of the individual studies for both the fixed- and random-effects models (Table 2; Figures 2 and 3) ranged from medium (|d| = 0.57 and 0.72, in two cases) to large, with a Cohen’s d greater than 0.8 in absolute value in the remaining three cases (Louis & Zelterman, 1994). In two of the studies, the control group (face-to-face PBL) was favoured, and in three, the intervention group (oPBL) was favoured.
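Cohen’s conventional thresholds (0.2 small, 0.5 medium, 0.8 large) can be applied mechanically to the Table 2 effect sizes. This sketch treats the sign of d as indicating which arm is favoured (positive for oPBL, negative for face-to-face PBL), the direction used in Table 2; the label for values below 0.2 is an assumption.

```python
def cohen_label(d):
    """Label |d| with Cohen's conventional benchmarks (0.2, 0.5, 0.8)."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


# Effect sizes from Table 2; positive d favours oPBL, negative favours face-to-face PBL.
table2 = {"Bowdish": -2.00, "Dennis": -1.47, "Luck": 3.20, "Brodie": 0.57, "Gürsul": 0.72}
for study, d in table2.items():
    favoured = "oPBL" if d > 0 else "face-to-face PBL"
    print(f"{study}: d = {d:+.2f} ({cohen_label(d)}), favours {favoured}")
```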

Cumulative Effect Sizes

The cumulative effect size, plotted as a function of publication year, showed a distinct shift over time from favouring face-to-face PBL towards favouring oPBL (Figure 5). This shift occurred during a decade in which a large body of published research could better inform subsequent studies on student scaffolding (e.g., prompts and other features, including argumentation tools), on forming groups and determining optimal group sizes for problem solving, on the appropriate mix of synchronous and asynchronous mediation and messaging between students and faculty, and on the use of hypermedia. Additional factors that may have played a role include later generations of students’ greater comfort with technology, better technology support for students, more faculty with experience in online environments, and fewer institutional shortfalls in front-end funding for the online delivery of courses.
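Figure 5 was produced with MetaAnalyst. As a rough, software-independent sketch, the cumulative estimate can be recomputed by re-pooling the Table 2 effect sizes with inverse-variance (fixed-effect) weights each time a study is added in publication-year order. The ordering of studies within the same year is an assumption, and MetaAnalyst may have used a different pooling model.

```python
def cumulative_fixed_effect(studies):
    """Re-pool the inverse-variance (fixed-effect) estimate each time one more
    study is added, in order of publication year."""
    ordered = sorted(studies, key=lambda s: s[1])  # stable: ties keep input order
    sum_w = 0.0
    sum_wd = 0.0
    history = []
    for name, year, d, se in ordered:
        w = 1.0 / se**2
        sum_w += w
        sum_wd += w * d
        history.append((name, year, sum_wd / sum_w))
    return history


# (study, year, d, SE of d) from Table 2; order within a year is assumed.
studies = [
    ("Bowdish", 2003, -2.00, 2.542),
    ("Dennis", 2003, -1.47, 1.682),
    ("Luck", 2004, 3.20, 4.462),
    ("Brodie", 2009, 0.57, 1.214),
    ("Gürsul", 2009, 0.72, 0.396),
]
for name, year, running_d in cumulative_fixed_effect(studies):
    print(f"{year} + {name}: cumulative d = {running_d:+.2f}")
```

With the Table 2 values, the running estimate starts at -2.00, rises steadily but stays negative through the 2003 and 2004 studies, and turns positive (about 0.57) only once both 2009 studies are included.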

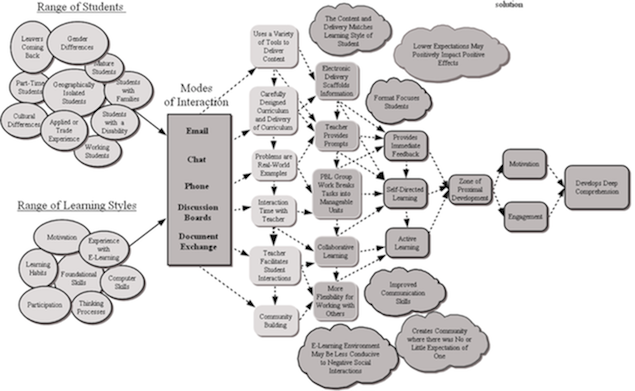

Range of Students

Examination of the five studies in the meta-analysis revealed that the instructional strategy and delivery of oPBL did not presuppose a homogeneous student cohort. Because its digital delivery makes it accessible, oPBL was found to be inclusive in nature and able to accommodate a wide range of students. Analysis of the method sections of the five studies found that this range included: school leavers returning to formal education, students with parenting responsibilities, mature students, part-time students possibly with employment/work responsibilities, geographically isolated students, students with a disability, students with applied or trade experience, and students of different genders and from a range of cultures (Figure 6).

Range of Learning Styles

Analysis of the five studies revealed a finding that mirrored the outcome relating to the range of students: qualitative analysis showed that oPBL, when delivered digitally, can meet the learning needs of students with a wide range of learning styles. Given that the online delivery of oPBL was accessible to a wide range of students, these students brought a range of learning styles and foundational skills, including differing learning habits, thinking processes, levels of computer skills and comfort with technical features, experience with online learning, and differences in participation rates and individual motivation (Figure 6).

Modes of Interaction

Communication modalities are modes of interaction used to accomplish the same communication tasks that are conducted in face-to-face learning environments. Qualitative analysis of the five studies in the meta-analysis revealed that oPBL was supported by five technical features that serve as modes of interaction/communication: email, online chat, phone, discussion boards, and document exchange. One study also used simultaneous online lesson delivery through MSN Messenger (Gürsul & Keser, 2009) (Figure 6).

Procedural Features that Enhance the Student oPBL Experience

A review of the five studies in the meta-analysis revealed that the digital delivery of oPBL offered students choices in the technical tools or features they could use to engage with the learning materials and their peers. Analysis revealed that the tools/features implemented to enhance students’ experiences with oPBL included setting guidelines for instructors’ availability and interactions with students (Gürsul & Keser, 2009), fostering awareness of and managing students’ behaviours (Luck & Norton, 2004), and using a “Student Code of Conduct” to give students clear behavioural standards for their personal conduct (Brodie, 2009) (Figure 6).

Online PBL Learning Process

While PBL is a collaborative learning process that occurs among students, oPBL has additional features that facilitate this process. Analysis of the five studies in the meta-analysis revealed that students’ learning processes are fostered both through the Course Management Software (CMS) and through the timing and frequency of instructors’ prompts and scaffolding. The design of CMS programs embeds the ability to scaffold content for students. All but one of the five studies used a commercially available CMS to design and deliver the course; in the Dennis study, the researchers designed their own course management software (Dennis, 2003). In that study, the course content was presented to students through directory structures and instructional displays designed to help learners manage the information being distributed (Dennis, 2003).

In addition to the scaffolding inherent in the design of the CMS, analysis of the five studies revealed that students were also scaffolded by their teachers. In the oPBL process, students received additional scaffolding when the instructor delivered prompts on an “as-needed” basis. Instructors frequently monitored the proceedings of the student groups. This monitoring could take place at intervals throughout the week, and the flexibility of oPBL meant that it was not confined to a fixed, discrete discussion/tutorial period (Figure 6).

Learning Impact

The general premise of digital learning is that it can increase accessibility and contact between instructors and students. Analysis of the five studies revealed that in oPBL, both the features of the Course Management Software and the instructors can scaffold knowledge for students in real time. Further, students can collaborate, support, and scaffold knowledge for each other in real time, and can use the text generated from synchronous chat discussions as material for reflection and learning. While online instructors were not always able to provide prompts in real time, individual students and groups were able to contact the instructor when they believed they needed advice or direction. This study finds that the digital orientation of oPBL provides multiple opportunities and modes of interaction and communication for immediate feedback between teachers and students. This may, in turn, strengthen and reinforce students’ learning outcomes, which could be a critical component in fostering their success (Figure 6).

Figure 6: Schematic of Qualitative Findings: The Factors that Explain Why oPBL is Effective

Why is oPBL Effective?

The foundation of oPBL is PBL, an empirically supported instructional strategy (Albanese & Mitchell, 1993). The elements common to both oPBL and PBL that make them effective are identifying the problem, identifying the known and unknown factors, sharing tasks, collecting data, cooperative problem solving, analyzing, reporting, and presenting a solution (Hung, Jonassen, & Liu, 2008; Jonassen, 1999).

Analysis of the five studies in the meta-analysis revealed that oPBL offers features beyond those found in the delivery of face-to-face PBL. Qualitative analysis showed that these studies addressed a wide range of students and learning styles. Further, this study found that the digital delivery of oPBL offers students and teachers several modes of interaction that can facilitate online communication, and that the studies implemented procedural features that enhanced students’ learning experiences and learning processes in oPBL. There may also be a general cumulative effect of the technical features available to students in the oPBL environment. When students can choose their modes of interaction and learning, they may be able to select an approach or orientation particularly suited to their individual needs. This availability of choice may improve the alignment between students’ learning environment and their learning strategies and, thus, may reasonably be associated with an increase in students’ comfort level.

The structure and delivery of oPBL content require strict pacing of the materials so that students’ knowledge is scaffolded and prompts are provided to support their learning process. Pacing is distinct from sequencing, which concerns only the order in which the material is presented. The materials must be appropriately paced to ensure that students stay on schedule and do not miss deadlines because of the time lag inherent in asynchronous communication. For this reason, oPBL content may need to be more carefully designed, structured, and organized for delivery, and teachers and tutors must be sensitive to the structure of the content. The consensus-building process of online collaborative groups may require more time and effort than that of face-to-face groups, especially when teachers/tutors need to schedule mutually agreeable times for synchronous communication with students. It may well be that the curriculum materials in oPBL are qualitatively better than those used in face-to-face environments, since the materials must be selected to provide inherent scaffolding and to be more amenable to prompting students.

In both PBL and oPBL, the course content consists of real-world problems. These problems are intentionally ambiguous, and their solutions may not be immediately obvious to students. The range of students in oPBL courses means that different students bring different aptitudes and skills to their collaborative group work. The oPBL process prompts students to deduce “knowns” and “unknowns”, an activity that can reflect the range of students, their learning styles, and their skills. Given that oPBL students range from mature learners to working, part-time learners, and that they possess a variety of skill sets and experiences to offer and share with their group members (Luck & Norton, 2004), the diversity of individuals and approaches focused on solving the problem may propel the problem-resolution process at a pace that keeps students engaged and motivated and allows them to complete the task within set deadlines.

The notion of a “safe learning space” is also an important consideration in developing a learning community. For student cohorts to feel that a community is being formed and nurtured, negative interactions among students must be kept to a minimum (McConnell, 2002). Among the five studies in the meta-analysis, Luck and Norton found evidence of competitiveness in the face-to-face group, whose instructor reported “cliquish” behavior among students; this behavior was absent in the oPBL group (Luck & Norton, 2004). Further, it was found that a “Student Code of Conduct” can minimize misunderstandings and prevent negative interactions among students, thereby enhancing their experience of oPBL. In the Brodie study, students were made stakeholders in a “Code of Conduct and Responsibilities” and were required to revisit the Code as a group several times during the semester to ensure group cohesiveness and fluid interactions (Brodie, 2009). Because such efforts toward optimal group functioning are required in online groups, they may well exceed the efforts made in face-to-face groups, which may further explain the stronger group functioning observed in oPBL.

Task sharing and student cooperation in solving a problem are two of ten PBL sub-dimensions that can be measured and expressed as achievement scores (Derry, 2005; Exley & Dennick, 2004). Gürsul and Keser found oPBL achievement scores to be higher than face-to-face PBL scores on both the task-sharing and cooperation sub-dimensions, and this difference was statistically significant (Gürsul & Keser, 2009). The same study also conducted a fine-grained analysis of students’ skills that included the PBL sub-dimension of presenting the solution to a problem (Derry, 2005; Exley & Dennick, 2004). It found that oPBL students had a greater command of presenting the solution than their face-to-face counterparts: “The achievement scores of the groups in the online environment, with respect to the sub-dimensions of task sharing, cooperation in the solution of problem, feedback and presenting the solution is higher than that of the groups in face-to-face learning. This difference is statistically significant” (Gürsul & Keser, 2009).

Lastly, the unifying explanation for oPBL’s success may lie in a cumulative effect of the format. The procedural features of oPBL, the inherent PBL process, and the cumulative scaffolding provided by the digital medium, collaboration with peers, and management of the problem itself together form an umbrella that channels these factors into a format that fosters student success. Additional factors unique to oPBL include a learning environment with a code of conduct and a relative absence of negative social interactions, an iterative set of expectations, an increase in student comfort as the learning environment aligns with students’ individual interaction and learning styles, and the fostering of a fledgling learning community where students may have expected little or none. Thus, the oPBL learning environment may be one that iteratively expands students’ learning expectations and achievement outcomes.

A limitation of this meta-analytic review is the only superficial homogeneity of the participant sample. Although the sample was homogeneous in that all students were in undergraduate programs, this grouping did not capture finer differences in student characteristics. For example, Luck and Norton (2004) acknowledged sampling issues, noting that their participants were enrolled in a novel program (i.e., a B.A. Nursery Management program combining early childcare education and business management). They acknowledged that this population’s learning motivation and level of self-directedness may be atypical compared with other undergraduate programs and students. Further, since all the studies included in this meta-analysis involved undergraduate programs, the findings on oPBL effectiveness may not generalize to graduate programs and graduate students.

Another concern relating to the participant sample is that the face-to-face and oPBL study waves were not conducted concurrently. Rather, most of the studies staggered the research waves by academic year (face-to-face one year, oPBL the next). Consequently, there may have been differences in the activities and in the tutors guiding the problem-solving groups. These differences may have conferred either an advantage or a disadvantage on the problem-solving groups, depending on the year.

Another limitation of this study is that the outcome measures were performance-based and expressed as student scores. Such measures may offer limited insight into student learning because they do not capture students’ learning processes, the development of understanding, or overall cognitive development, which are richer indicators of student success. For example, the studies do not allow indicators of the learning process, such as time spent on task, time spent using resources, and time spent engaged in the problem, to be examined or compared between the oPBL and face-to-face groups.

A further limitation relates to the uneven reporting of method, data, and findings in the five studies included in the meta-analysis. While data on the oPBL strategy were well represented, a commensurate amount of qualitative data on the face-to-face strategy was not reported or discussed.

The limitations of this combined meta-analytic and qualitative study provide grounds for future research. Studies to be included in future meta-analyses and qualitative analyses should strive to represent quantitative and qualitative methods, data, and findings equally, so that exploratory analysis can generate deeper understanding of processes within and between learning strategies. For example, qualitative data on students’ problems with engagement, interactions, and time on task would provide deeper insight into the learning process and the impact of face-to-face learning and oPBL.

The studies included in the meta-analysis reported narrow standard deviations around the mean final test scores. This suggests that attainment of the course material was highly consistent in both oPBL and face-to-face PBL. The meta-analysis revealed that oPBL students performed marginally better than face-to-face PBL students on exam questions focused on problem solving.
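The metric pooled in the review is not restated here. Assuming a standardized mean difference such as Hedges' g (a common choice when two groups' test scores are compared), the narrow standard deviations matter because the pooled standard deviation is the denominator: the same raw difference in mean scores yields a larger standardized effect when scores are tightly clustered. The minimal Python sketch below illustrates this; the function name `hedges_g` and all input values are hypothetical and are not drawn from the reviewed studies.

```python
import math


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Bias-corrected standardized mean difference (Hedges' g).

    Group 1 is treated as oPBL and group 2 as face-to-face PBL, so a
    positive value favours oPBL. Inputs are one study's summary statistics.
    """
    # Pooled standard deviation across the two groups
    pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    pooled_sd = math.sqrt(pooled_var)
    # Cohen's d: raw mean difference divided by the pooled SD
    d = (mean_1 - mean_2) / pooled_sd
    # Small-sample correction factor J = 1 - 3 / (4 * df - 1), df = n_1 + n_2 - 2
    j = 1 - 3 / (4 * (n_1 + n_2) - 9)
    return j * d


# Hypothetical summary statistics, NOT taken from the reviewed studies:
# the same 2-point difference in mean scores under narrow vs. wide spread.
narrow = hedges_g(72.0, 5.0, 30, 70.0, 5.0, 30)
wide = hedges_g(72.0, 15.0, 30, 70.0, 15.0, 30)
print(f"narrow SDs: g = {narrow:.2f}; wide SDs: g = {wide:.2f}")
```

With these hypothetical inputs, the same 2-point advantage gives g of about 0.39 when both groups have a standard deviation of 5, but only about 0.13 when it is 15.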

The qualitative analysis of the five meta-analysis studies revealed an iterative phenomenon involving students’ perceptions of course outcomes, gains in self-efficacy, and the beneficial formation of small online learning communities. Online PBL students appear positioned to have their original expectations of potential gains positively reinforced by the end of the course.

Brian Jurewitsch is a lecturer at the University of Toronto. E-mail: jurebrid@gmail.co